We will use the following VPP filters:

- CSIR VPP Scale Filter

- CSIR VPP H264 Encoder

- CSIR VPP RTSP Sink Filter

- CSIR VPP RTSP Source Filter

You will also need GraphEdit, GraphStudio or GraphStudioNext (recommended) to construct the graphs.

The VPP RTSP filters use the Live555 Streaming Media libraries, which implement the RTSP, RTP and RTCP stacks.

Figure 1: RTSP Sink Graph

It is important to limit the source resolution (via IAMStreamConfig or the VPP Scale Filter) and the frame rate (via IAMStreamConfig or the VPP Frame Skipping Filter), as both affect the bitrate and the time required to encode each frame. The current VPP Encoder can comfortably encode CIF (352x288) video at 15 frames per second in Mode 1. In Mode 1 the encoder limits the bits per frame to the specified Frame Bit Limit parameter. In this example we have selected 5000 bits per frame; at, for example, 10 frames per second, this equals 50 kbit/s. Note that the filters must be configured before the graph is played.

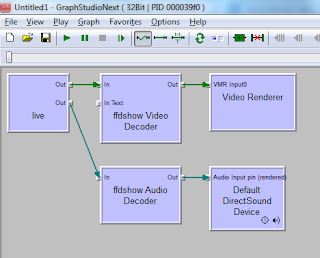
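The bitrate arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `stream_bitrate_bps` is hypothetical and not part of the VPP filters, and it assumes every frame uses the full Frame Bit Limit, so the result is an upper bound on the video payload that ignores RTP and other packet overhead.

```python
def stream_bitrate_bps(frame_bit_limit: int, frames_per_second: float) -> float:
    """Upper bound on the video bitrate in bits per second.

    Assumes each encoded frame uses at most `frame_bit_limit` bits
    (Mode 1 behaviour as described in the text) and ignores transport overhead.
    """
    return frame_bit_limit * frames_per_second


# Values from the example: 5000 bits per frame at 10 frames per second.
print(stream_bitrate_bps(5000, 10) / 1000)  # 50.0 (kbit/s)

# The same frame limit at 15 frames per second.
print(stream_bitrate_bps(5000, 15) / 1000)  # 75.0 (kbit/s)
```

Lowering either the Frame Bit Limit or the frame rate reduces the bound proportionally, which is why both should be chosen before the graph is played.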

Once the graph is played, start another instance of GraphStudioNext.

Figure 2: RTSP Source Graph

Figure 3: Rendered RTSP Source Graph

Figure 4: RTSP with VLC

When this parameter is too small, VLC struggles to synchronise the audio and video, and many frames are discarded as a result. This can be seen in the logging window if the verbosity is set high enough.